Architecture

As the model size of pre-trained language models (PLMs) grows rapidly, full fine-tuning becomes prohibitively expensive for model training and storage. In vision-and-language (VL), parameter-efficient tuning (PET) techniques are proposed to integrate modular modifications (e.g., Adapter and LoRA) into encoder-decoder PLMs. By tuning a small set of trainable parameters, these techniques perform on par with full fine-tuning. However, excessive modular modifications and neglecting the functionality gap between the encoders and decoders can lead to performance degradation, while existing PET techniques (e.g., VL-Adapter) overlook these critical issues.

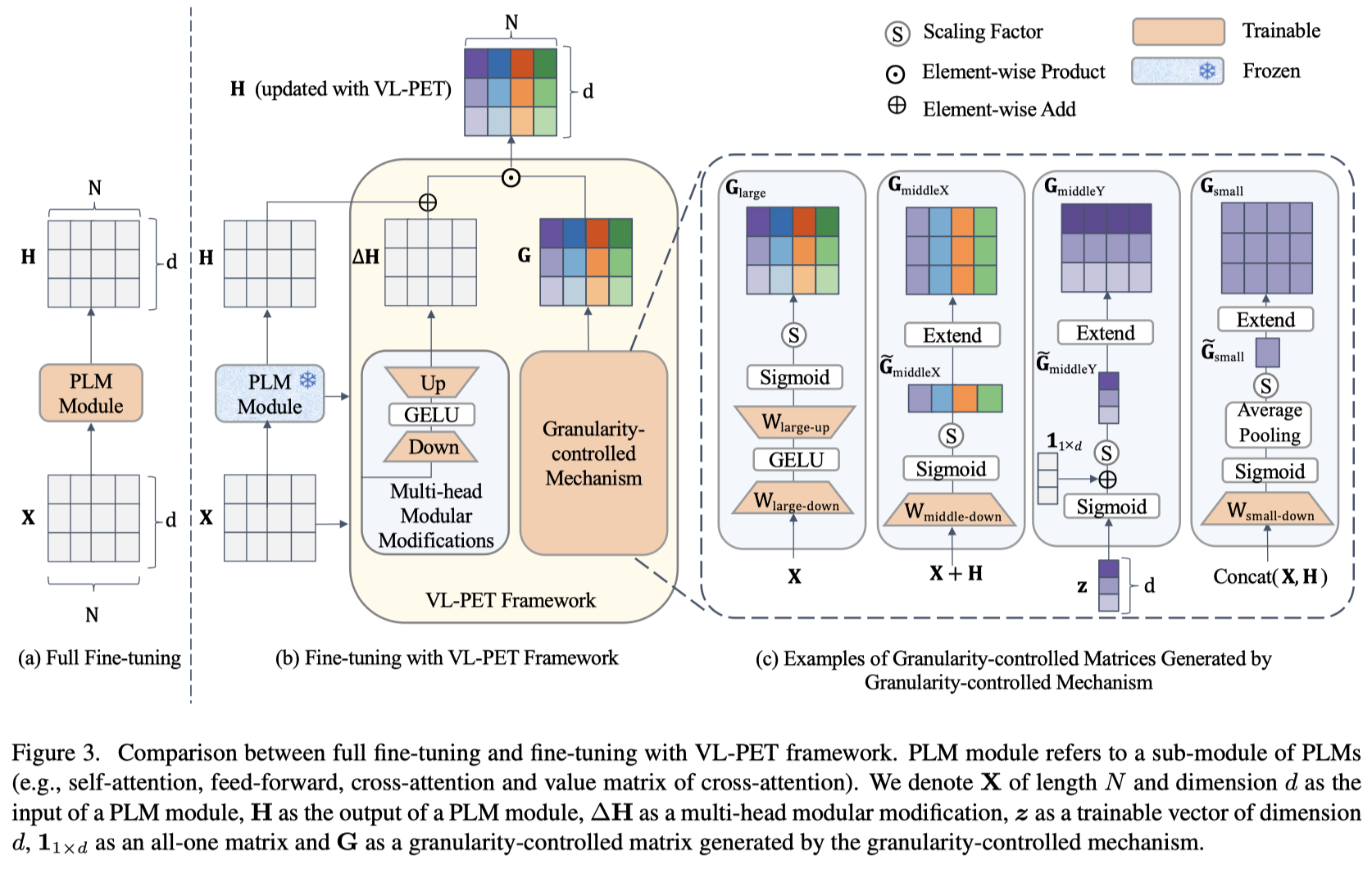

In this paper, we propose a Vision-and-Language Parameter-Efficient Tuning (VL-PET) framework to impose effective control over modular modifications via a novel granularity-controlled mechanism. Considering different granularity-controlled matrices generated by this mechanism, a variety of model-agnostic VL-PET modules can be instantiated from our framework for better efficiency and effectiveness trade-offs. We further propose lightweight PET module designs to enhance VL alignment and modeling for the encoders and maintain text generation for the decoders.

Extensive experiments conducted on four image-text tasks and four video-text tasks demonstrate the efficiency, effectiveness and transferability of our VL-PET framework. In particular, our VL-PET-large with lightweight PET module designs significantly outperforms VL-Adapter by 2.92% (3.41%) and LoRA by 3.37% (7.03%) with BART-base (T5-base) on image-text tasks. Furthermore, we validate the enhanced effect of employing our VL-PET designs on existing PET techniques, enabling them to achieve significant performance improvements.

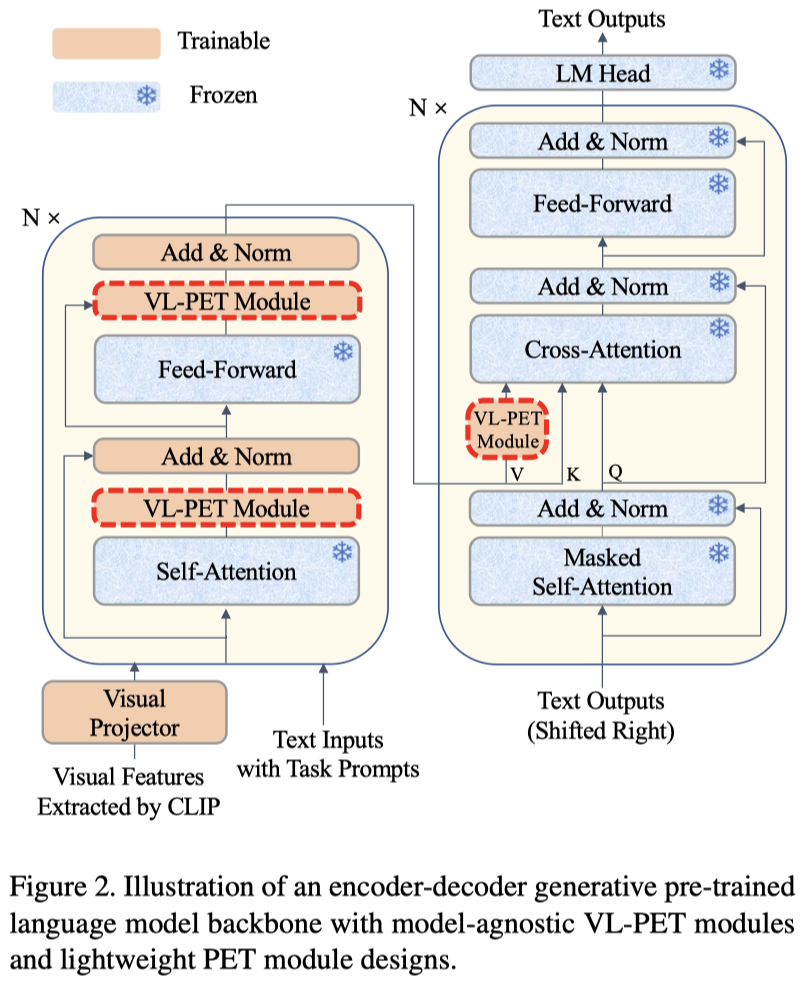

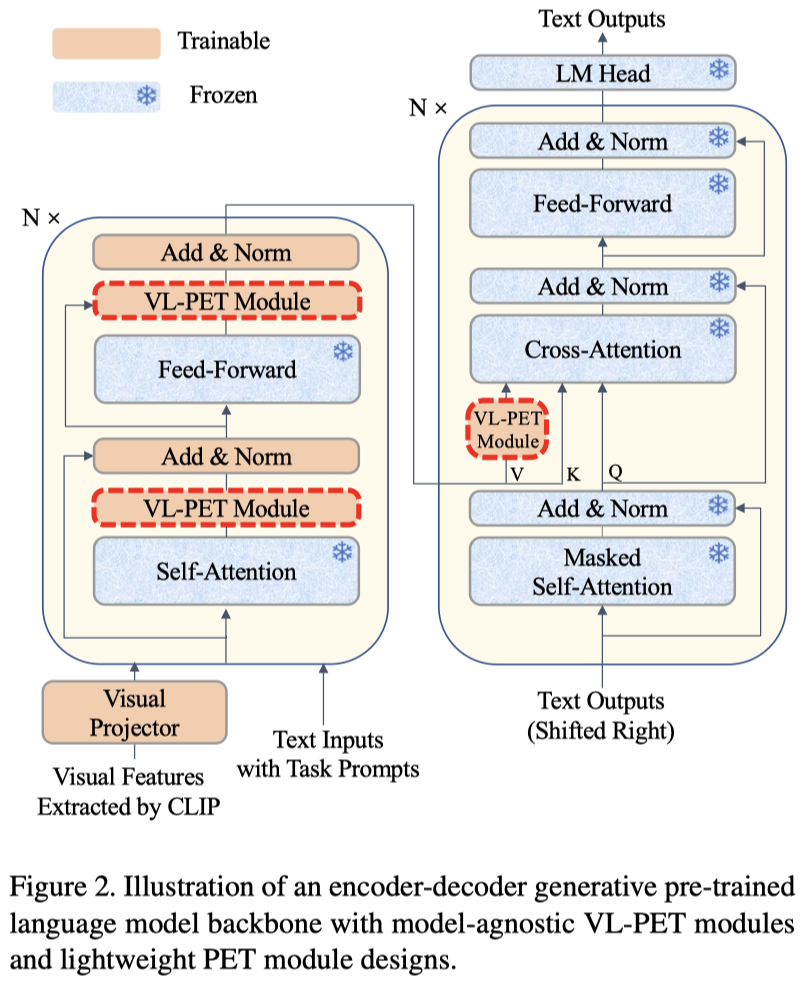

In this section, we propose a novel Vision-and-Language Parameter-Efficient Tuning (VL-PET) framework for encoder-decoder generative PLMs. An illustration of our model is shown in Figure 2.

We propose a novel granularity-controlled mechanism to generate a granularity-controlled matrix at different granularity control levels, which regulates the output of the modular modifications introduced by PET techniques. As shown in Figure 3 and Table 1, considering different granularity control levels and a multi-head modular modification, a variety of model-agnostic VL-PET modules can be instantiated from the proposed VL-PET framework. We further propose lightweight PET module designs to facilitate suitable VL-PET module integration into the encoders and decoders.
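As a rough illustration of what "granularity control levels" could look like, the sketch below assumes that the granularity-controlled matrix G scales the output of a modular modification element-wise, and that the four levels differ only in the shape of G (per element, per token, per hidden dimension, or a single shared weight). The level-to-shape mapping and the helper names `gate_shape` and `apply_gate` are assumptions made for illustration; please refer to the paper for the actual definitions.

```python
# Hypothetical sketch: assumed shapes of a granularity-controlled matrix G and
# how it could scale a modular modification delta_h (N tokens x d hidden dims).
# The level-to-shape mapping is an assumption, not taken from the paper.

def gate_shape(level, n_tokens, hidden_dim):
    """Return the assumed (rows, cols) shape of G for a granularity level."""
    shapes = {
        "large": (n_tokens, hidden_dim),  # one weight per element
        "middleX": (n_tokens, 1),         # one weight per token
        "middleY": (1, hidden_dim),       # one weight per hidden dimension
        "small": (1, 1),                  # a single shared weight
    }
    return shapes[level]


def apply_gate(gate, delta_h):
    """Scale delta_h element-wise by gate, broadcasting size-1 axes of gate."""
    rows, cols = len(gate), len(gate[0])
    n_tokens, hidden_dim = len(delta_h), len(delta_h[0])
    assert rows in (1, n_tokens) and cols in (1, hidden_dim)
    return [
        [gate[i % rows][j % cols] * value for j, value in enumerate(row)]
        for i, row in enumerate(delta_h)
    ]


# Toy example with N=2 tokens and d=3 hidden dimensions.
delta_h = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
per_token_gate = [[0.5], [0.0]]  # "middleX"-style gate under the assumption above
gated = apply_gate(per_token_gate, delta_h)
```

In this toy example the first token's modification is halved and the second token's modification is suppressed entirely, which is the kind of control a coarser or finer gate would provide under these assumptions.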

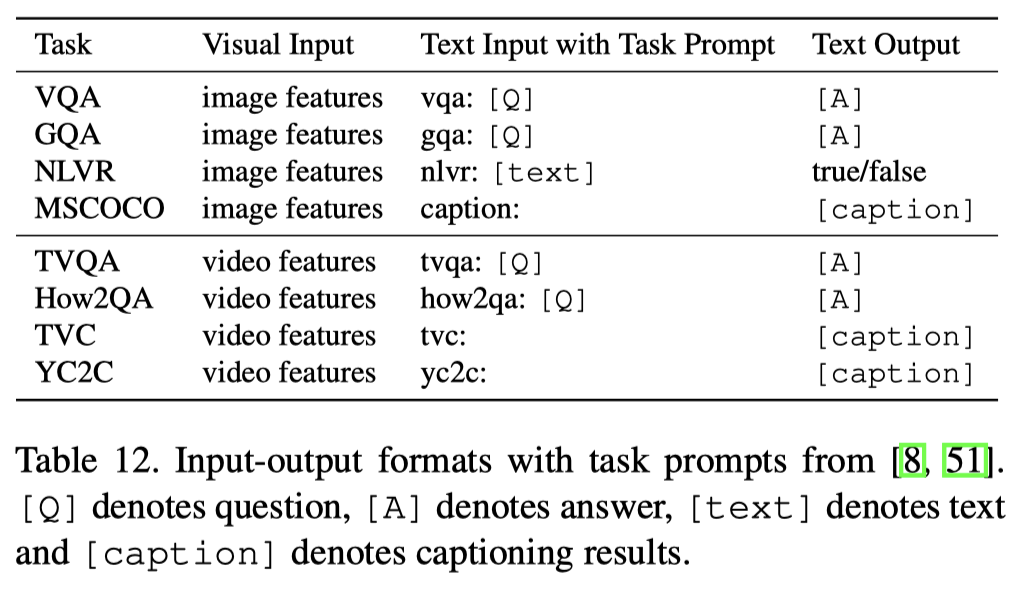

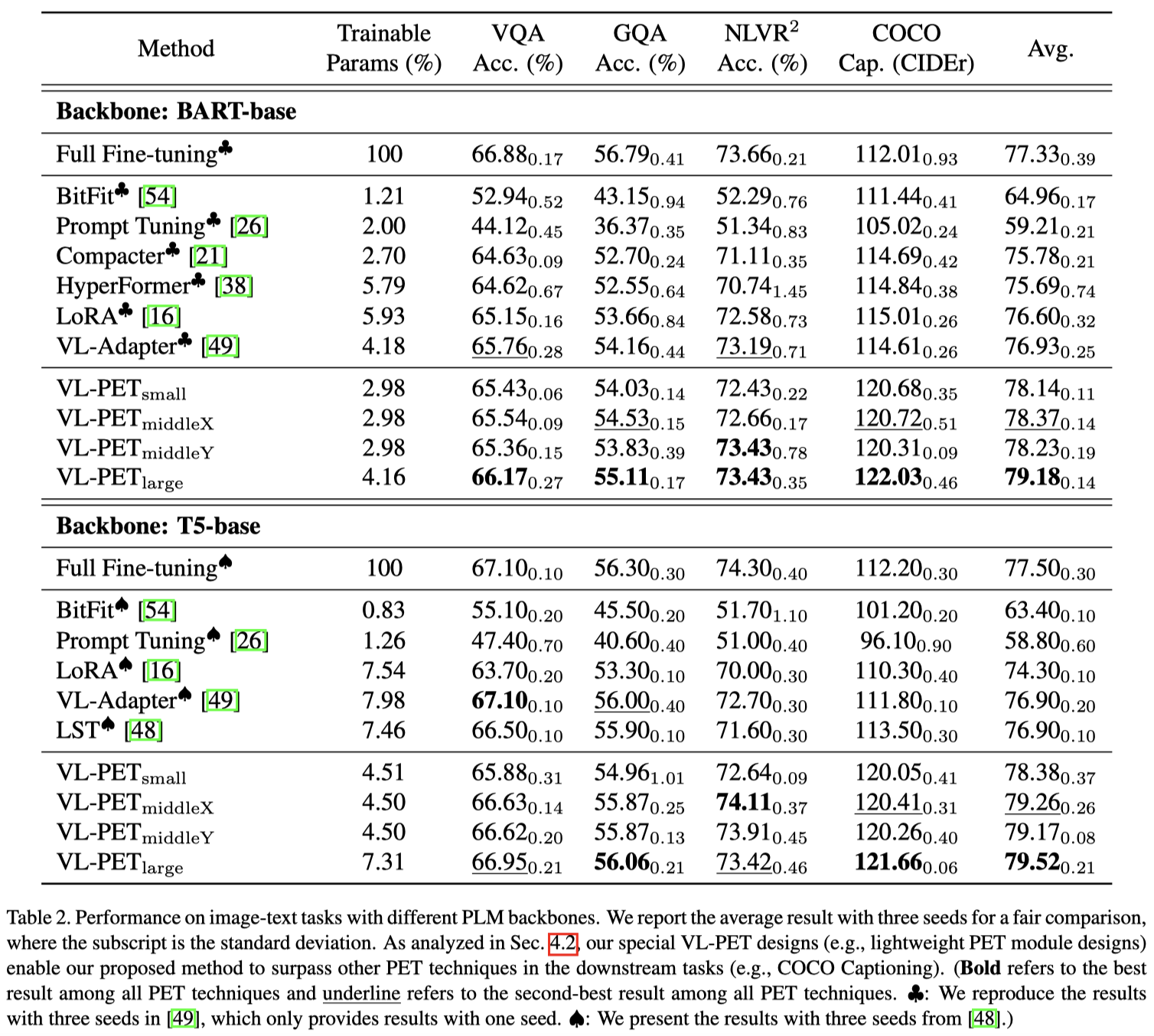

To validate the efficiency, effectiveness and transferability of our VL-PET framework and model-agnostic VL-PET modules, we conduct experiments on image-text tasks with different PLM backbones (i.e., BART-base and T5-base).

Table 2 shows the performance of full fine-tuning, state-of-the-art PET techniques and four VL-PET instantiations on image-text tasks with BART-base. All four of our VL-PET modules with lightweight PET module designs significantly outperform other PET techniques, as shown in Figure 1, which demonstrates the efficiency and effectiveness of the proposed VL-PET framework. Specifically, VL-PET-large outperforms VL-Adapter and LoRA on all downstream tasks. In relative terms, VL-PET-large surpasses VL-Adapter by 2.92% with comparable trainable parameters (4.16% < 4.18%) and LoRA by 3.37% with fewer trainable parameters (4.16% < 5.93%). VL-PET-small, VL-PET-middleX and VL-PET-middleY also surpass VL-Adapter by 1.57%, 1.87% and 1.69% respectively, while utilizing far fewer trainable parameters (2.98% < 4.18%). We observe that our VL-PET method performs comparably to or even outperforms full fine-tuning, and most of the gains can be attributed to improvements in the COCO captioning task. This phenomenon justifies the necessity of our special VL-PET designs (e.g., lightweight PET module designs) for encoders and decoders, which help to preserve the text generation ability of the pre-trained decoders.
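The relative improvements quoted above can be read as a percentage change over the baseline score. The snippet below is a minimal sketch assuming the usual definition of relative gain; the function name `relative_gain` and the example scores are hypothetical and are not values from Table 2.

```python
def relative_gain(score, baseline):
    """Relative improvement of score over baseline, in percent."""
    return (score - baseline) / baseline * 100.0


# Hypothetical average scores for illustration only, NOT values from Table 2.
ours, baseline = 82.4, 80.0
gain = relative_gain(ours, baseline)
print(f"relative gain: {gain:.2f}%")
```

Under this definition, a method that scores 82.4 against a baseline of 80.0 has a relative gain of 3.00%, even though the absolute difference is 2.4 points.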

Since the instantiated VL-PET modules are model-agnostic, we transfer them to another, larger PLM backbone, i.e., T5-base. As shown in Table 2, the trends of performance improvement of VL-PET modules in T5-base remain consistent with those observed in BART-base. All four VL-PET modules with lightweight PET module designs significantly outperform other PET techniques. In particular, VL-PET-large outperforms LST, LoRA and VL-Adapter on most downstream tasks with fewer trainable parameters (7.31% < 7.46% < 7.54% < 7.98%), except for slightly lower performance on VQA compared to VL-Adapter. Specifically, VL-PET-small, VL-PET-middleX, VL-PET-middleY and VL-PET-large surpass VL-Adapter and LST by 1.92%, 3.07%, 2.95% and 3.41% respectively. They also surpass LoRA by 5.49%, 6.68%, 6.55% and 7.03% respectively. The VL-PET modules show a significant performance improvement with a larger PLM. However, other PET techniques do not exhibit a similar improvement, and some of them even perform worse than their BART-base counterparts. These results demonstrate the effectiveness, efficiency, and transferability of our proposed VL-PET framework.

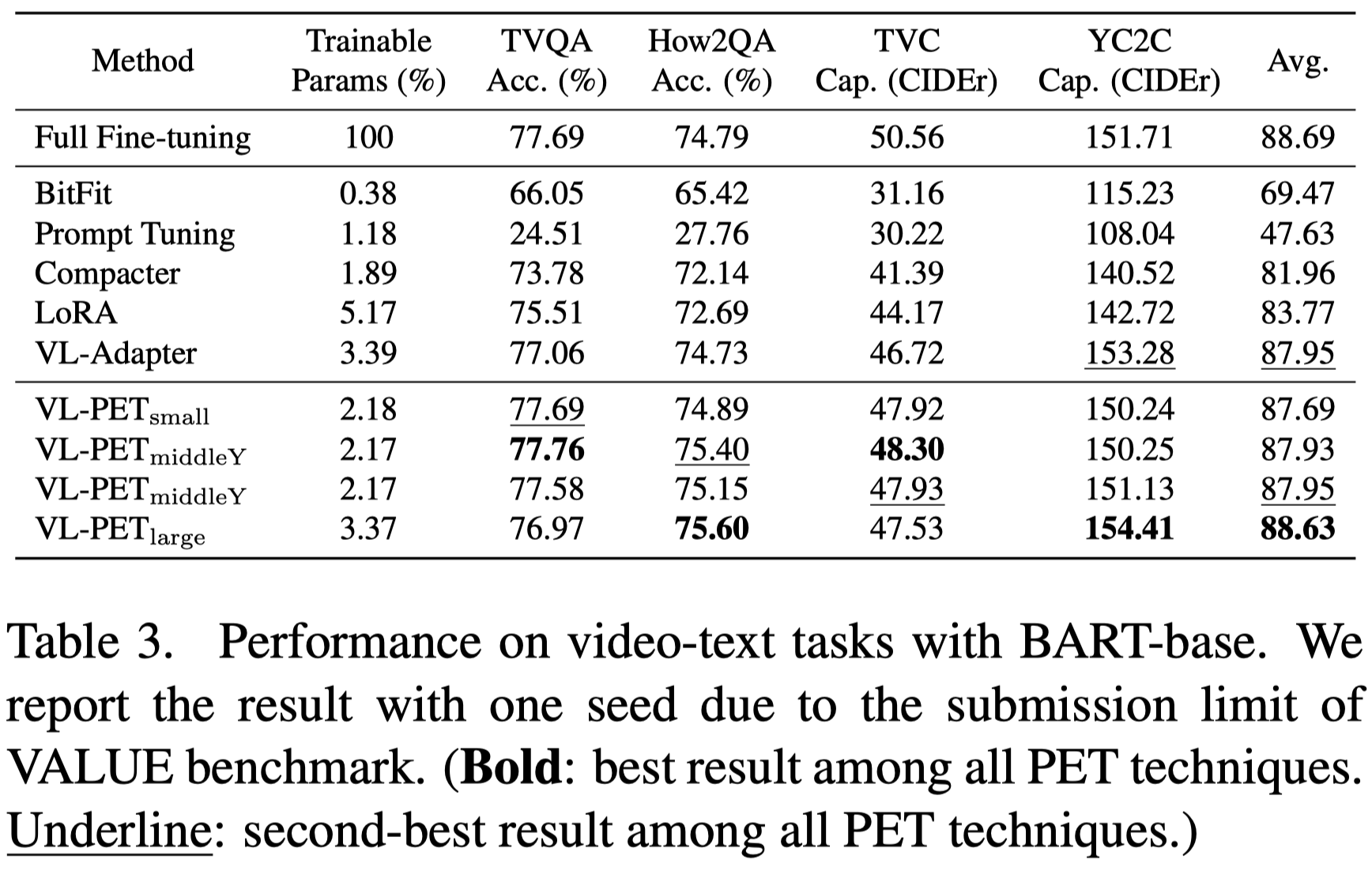

In Table 3, we also test our instantiated VL-PET modules with lightweight PET module designs on video-text tasks.

The four VL-PET modules outperform most state-of-the-art PET techniques and attain performance comparable to full fine-tuning with the BART-base backbone. Concretely, VL-PET-large surpasses VL-Adapter by 0.77% with comparable trainable parameters (3.37% < 3.39%) and LoRA by 5.80% with fewer trainable parameters (3.37% < 5.17%). VL-PET-small, VL-PET-middleX and VL-PET-middleY perform on par with VL-Adapter with fewer trainable parameters (2.18% < 3.39%) and also outperform LoRA by a large margin. These results reveal the efficiency and effectiveness of our VL-PET framework.

Please refer to our paper for the details of ablation studies. :)

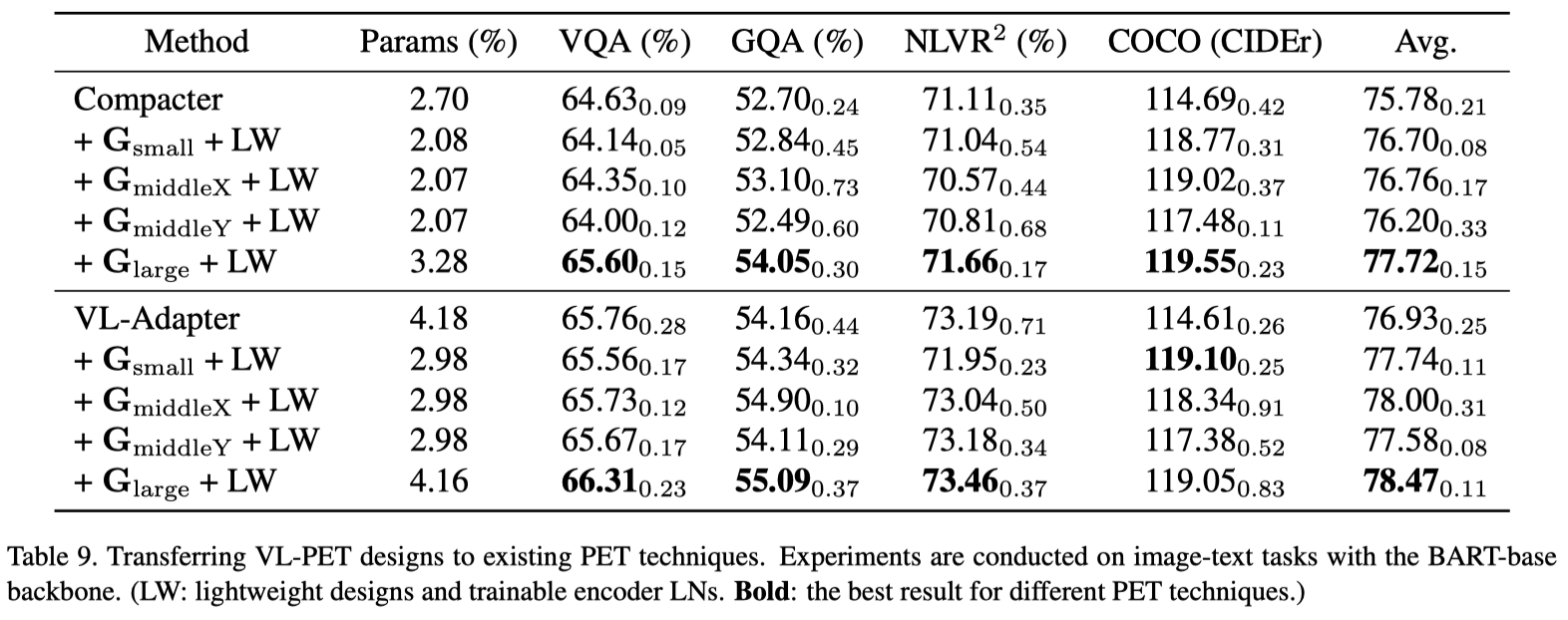

In this section, we validate the transferability of some of our VL-PET designs to state-of-the-art PET techniques (e.g., Compacter and VL-Adapter). As described in Sec. 3.2 and Sec. 3.3, we first impose effective control over the modular modifications introduced by these PET techniques. To simplify the validation of lightweight PET module designs, we only retain the decoder PET modules in the cross-attention modules from their conventional designs. As done in VL-PET, we similarly freeze the PLM backbone, except for the encoder LNs.
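The freezing scheme described above (frozen backbone, trainable PET modules and encoder LNs) determines the trainable-parameter percentages reported in the tables. The sketch below shows how such a percentage could be computed from parameter names; the names, sizes, and the `pet_module` and `layer_norm` naming patterns are hypothetical and do not correspond to the actual checkpoint layout in this repository.

```python
# Hypothetical parameter names and sizes; real checkpoints use other names.

def is_trainable(name):
    """Train PET modules and encoder layer norms; freeze everything else."""
    if "pet_module" in name:
        return True
    return name.startswith("encoder.") and "layer_norm" in name


def trainable_percent(param_sizes):
    """Percentage of parameters left trainable under is_trainable."""
    total = sum(param_sizes.values())
    trainable = sum(n for name, n in param_sizes.items() if is_trainable(name))
    return 100.0 * trainable / total


toy_model = {
    "encoder.layer0.self_attn.weight": 900,             # frozen backbone
    "encoder.layer0.layer_norm.weight": 20,             # trainable encoder LN
    "encoder.layer0.pet_module.weight": 30,             # trainable PET module
    "decoder.layer0.cross_attn.weight": 1000,           # frozen backbone
    "decoder.layer0.layer_norm.weight": 20,             # frozen decoder LN
    "decoder.layer0.cross_attn.pet_module.weight": 30,  # trainable PET module
}
percent = trainable_percent(toy_model)
print(f"trainable parameters: {percent:.2f}%")
```

In this toy model only 80 of 2000 parameters remain trainable (4.00%), with the decoder PET module placed in the cross-attention module as in the simplified setup described above.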

Results in Table 9 demonstrate that applying our VL-PET designs to existing PET techniques leads to significant performance improvements. In particular, Compacter and VL-Adapter with G-middleX outperform their original versions by 1.29% and 1.39%, respectively, while utilizing fewer trainable parameters (2.07% < 2.70% and 2.98% < 4.18%). Compacter and VL-Adapter with G-large outperform their original versions by an even larger margin of 2.56% and 2.00%, respectively. These results again validate the universality of our VL-PET designs.

The granularity-controlled mechanism described in Sec. 3.2 dynamically assigns importance weights to each element in the intermediate hidden states. To gain more insight into how it works, some visualizations are provided in Figure 5, where we visualize the heatmap of G-large in the first encoder self-attention module of BART-base (hidden dimension d=768).

Given two randomly picked inputs from VQAv2 and NLVR2, G-large changes dynamically based on the inputs and thus assigns different importance weights to the hidden states. For some text tokens, large weights are densely assigned to almost all of their elements. For other tokens (especially vision tokens), large weights are sparsely distributed on their elements. Such learned weight assignment strategies attest that our granularity-controlled mechanism is a non-trivial method.

If you find VL-PET useful for your research, please consider giving this repository a star and citing our paper as follows:

@inproceedings{hu2023vlpet,
  title = {VL-PET: Vision-and-Language Parameter-Efficient Tuning via Granularity Control},
  author = {Zi-Yuan Hu and Yanyang Li and Michael R. Lyu and Liwei Wang},
  booktitle = {ICCV},
  year = {2023}
}

We borrow our logo from OpenMoji because it looks like a cute and colorful pet puppy. However, upon closer inspection, one may discover that it is in fact a donkey! But hey, just imagine having a donkey as our pet! That wouldn't be too bad, would it? :)